Texas Attorney General Reaches Settlement with AI Healthcare Firm

Artificial intelligence (AI) in healthcare is a fast-growing field that promises to improve patient care, diagnostics, and operational efficiency. Like any rapidly advancing technology, however, it brings a host of legal and ethical challenges. Recently, the Texas Attorney General reached a significant settlement with an AI healthcare firm, highlighting the complexities and regulatory hurdles in this domain. This article covers the details of the settlement, its implications for the healthcare industry, and the broader context of AI in healthcare.

Background of the Settlement

The settlement between the Texas Attorney General and the AI healthcare firm marks a pivotal moment in the regulation of AI technologies in the medical field. The firm in question, which had been at the forefront of developing AI-driven diagnostic tools, faced allegations of misleading marketing practices and data privacy violations. The Attorney General’s office argued that the firm overstated the capabilities of its AI systems, potentially endangering patient safety and violating consumer protection laws.

The settlement, which includes a substantial financial penalty and a commitment to overhaul the firm’s marketing and data handling practices, underscores the importance of transparency and accountability in AI applications. Although a settlement is not binding legal precedent, it signals how regulators may handle similar cases in the future as they strive to balance innovation with consumer protection.

Key elements of the settlement include:

- A financial penalty of $10 million to be paid by the firm.

- Mandatory revisions to marketing materials to accurately reflect the capabilities and limitations of AI tools.

- Implementation of robust data privacy measures to protect patient information.

- Regular audits by an independent third party to ensure compliance with the settlement terms.

The subsections below examine the specific allegations, the legal proceedings, and the firm’s response and compliance measures.

Allegations and Legal Proceedings

The allegations against the AI healthcare firm centered on two primary issues: misleading marketing practices and inadequate data privacy protections. The Attorney General’s office claimed that the firm exaggerated the accuracy and reliability of its AI diagnostic tools, leading healthcare providers and patients to place undue trust in the technology. This, in turn, could have resulted in misdiagnoses or inappropriate treatment plans.

Furthermore, the firm was accused of failing to implement adequate safeguards to protect patient data. In an era where data breaches and cyber threats are increasingly common, the protection of sensitive health information is paramount. The firm’s alleged lapses in this area raised significant concerns about patient privacy and data security.

The legal proceedings were closely watched by industry experts and regulators, as they highlighted the challenges of applying existing consumer protection and privacy laws to emerging technologies. The case underscored the need for clear guidelines and standards to govern the use of AI in healthcare, ensuring that innovation does not come at the expense of patient safety and privacy.

The Firm’s Response and Compliance Measures

In response to the allegations, the AI healthcare firm initially defended its practices, arguing that its marketing materials were based on rigorous testing and validation of its AI tools. However, as the legal proceedings unfolded, the firm agreed to settle the case, acknowledging the need for greater transparency and accountability.

As part of the settlement, the firm committed to a comprehensive review and revision of its marketing materials. This included providing clear and accurate information about the capabilities and limitations of its AI systems, as well as the potential risks and benefits for patients and healthcare providers.

In addition to marketing revisions, the firm agreed to implement enhanced data privacy measures. This involved adopting industry best practices for data encryption, access controls, and breach detection, as well as conducting regular audits to ensure compliance with privacy regulations.
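To make the access control and audit ideas above more concrete, here is a minimal sketch in Python. It is not the firm’s system or a complete security design: the role names, permission names, and the `flag_suspicious_users` threshold are all hypothetical, chosen only to show how a permission check can write an audit trail that later supports breach detection.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical role-to-permission map; a real deployment would load this from policy.
ROLE_PERMISSIONS = {
    "clinician": {"read_record", "write_note"},
    "billing": {"read_billing"},
    "analyst": {"read_deidentified"},
}


@dataclass
class AuditLog:
    """In-memory audit trail; every access decision is recorded."""
    entries: list = field(default_factory=list)

    def record(self, user: str, role: str, action: str, allowed: bool) -> None:
        self.entries.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "action": action,
            "allowed": allowed,
        })

    def denied_count(self, user: str) -> int:
        return sum(1 for e in self.entries if e["user"] == user and not e["allowed"])


def authorize(user: str, role: str, action: str, log: AuditLog) -> bool:
    """Allow the action only if the role grants it, and log the decision either way."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    log.record(user, role, action, allowed)
    return allowed


def flag_suspicious_users(log: AuditLog, threshold: int = 3) -> list:
    """Return users with at least `threshold` denied attempts (assumed cutoff)."""
    users = {e["user"] for e in log.entries}
    return sorted(u for u in users if log.denied_count(u) >= threshold)
```

Logging denied attempts as well as granted ones is the design choice that matters here: repeated denials are often the earliest visible sign of misuse, and an independent auditor can only review what was recorded.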

The firm’s response and compliance measures serve as a valuable case study for other companies operating in the AI healthcare space. By prioritizing transparency and data protection, firms can build trust with consumers and regulators, paving the way for sustainable growth and innovation.

Implications for the Healthcare Industry

The settlement between the Texas Attorney General and the AI healthcare firm has far-reaching implications for the healthcare industry. As AI technologies become increasingly integrated into medical practice, healthcare providers, technology developers, and regulators must navigate a complex landscape of ethical, legal, and operational challenges.

This section will explore the broader implications of the settlement, focusing on the impact on healthcare providers, the role of AI in patient care, and the regulatory landscape for AI technologies.

Impact on Healthcare Providers

For healthcare providers, the settlement serves as a reminder of the importance of due diligence when adopting new technologies. While AI offers significant potential to enhance diagnostic accuracy and streamline operations, providers must critically evaluate the tools they use, ensuring that they are based on sound scientific evidence and comply with regulatory standards.
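One way a provider might put this due diligence into practice is to compare a vendor’s advertised accuracy with results from its own validation sample. The sketch below uses the Wilson score interval, a standard confidence interval for a proportion. The function names and the sample figures are illustrative assumptions, not numbers from the settlement.

```python
import math


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple:
    """Wilson score interval for a binomial proportion (z=1.96 is roughly 95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p_hat = successes / n
    denom = 1 + z**2 / n
    center = (p_hat + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2)) / denom
    return center - half, center + half


def claim_is_supported(claimed: float, successes: int, n: int) -> bool:
    """True if the claimed accuracy does not exceed the interval's upper bound."""
    _, high = wilson_interval(successes, n)
    return claimed <= high


# Hypothetical local validation: 180 correct results out of 200 cases.
low, high = wilson_interval(180, 200)
print(f"observed accuracy plausibly between {low:.3f} and {high:.3f}")
print("99% claim supported:", claim_is_supported(0.99, 180, 200))
```

With these made-up numbers the interval runs from roughly 0.85 to 0.93, so an advertised accuracy of 99% would not be consistent with what the provider observed. A small sample gives a wide interval, which is itself useful information when weighing a marketing claim.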

The settlement also highlights the need for ongoing education and training for healthcare professionals. As AI tools become more prevalent, providers must be equipped with the knowledge and skills to effectively integrate these technologies into their practice. This includes understanding the limitations of AI systems, interpreting AI-generated insights, and making informed decisions based on a combination of AI and clinical expertise.

Moreover, healthcare providers must prioritize patient communication and consent when using AI tools. Patients have a right to understand how their data is being used and the role of AI in their care. By fostering open and transparent communication, providers can build trust with patients and ensure that AI technologies are used ethically and responsibly.

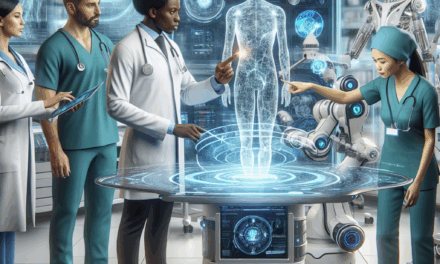

The Role of AI in Patient Care

The settlement raises important questions about the role of AI in patient care. While AI has the potential to transform healthcare delivery, it is essential to recognize that AI systems are not infallible. They are tools that can augment, but not replace, human judgment and expertise.

AI technologies can enhance diagnostic accuracy by analyzing large volumes of data and identifying patterns that may be missed by human clinicians. However, they are only as good as the data they are trained on and the algorithms that power them. Biases in data or algorithm design can lead to inaccurate or inequitable outcomes, underscoring the need for rigorous testing and validation.

Furthermore, the integration of AI into patient care must be guided by ethical principles. This includes ensuring that AI tools are used to enhance, rather than undermine, patient autonomy and dignity. Healthcare providers must be vigilant in monitoring the impact of AI on patient outcomes and be prepared to intervene if AI-generated recommendations conflict with clinical judgment or patient preferences.

Regulatory Landscape for AI Technologies

The settlement highlights the evolving regulatory landscape for AI technologies in healthcare. As AI becomes more prevalent, regulators face the challenge of developing frameworks that balance innovation with consumer protection and ethical considerations.

In the United States, the Food and Drug Administration (FDA) plays a key role in regulating AI-based medical devices. The FDA has issued guidance on the development and evaluation of AI technologies, emphasizing the importance of transparency, accountability, and patient safety. However, the rapid pace of technological advancement presents ongoing challenges for regulators, who must adapt their frameworks to keep pace with innovation.

At the state level, attorneys general and consumer protection agencies are increasingly involved in overseeing AI technologies. The Texas settlement offers a model for how state regulators can address misleading marketing and data privacy issues in the AI healthcare space.

Internationally, regulatory approaches to AI in healthcare vary widely. The European Union has taken a proactive stance: its Artificial Intelligence Act, which entered into force in August 2024, establishes comprehensive rules for AI systems. Other countries are also exploring regulatory frameworks to address the unique challenges posed by AI in healthcare.

Ethical Considerations in AI Healthcare

The integration of AI into healthcare raises a host of ethical considerations that must be carefully navigated to ensure that these technologies are used responsibly and equitably. The settlement between the Texas Attorney General and the AI healthcare firm brings these ethical issues to the forefront, highlighting the need for a thoughtful and principled approach to AI in healthcare.

This section will explore key ethical considerations in AI healthcare, including issues of bias and fairness, patient autonomy and consent, and the broader societal implications of AI technologies.

Bias and Fairness in AI Systems

One of the most pressing ethical concerns in AI healthcare is the potential for bias in AI systems. Bias can arise from a variety of sources, including biased training data, algorithmic design choices, and the interpretation of AI-generated insights. If left unaddressed, bias can lead to inequitable outcomes, disproportionately affecting marginalized or underserved populations.

For example, if an AI diagnostic tool is trained on data that predominantly represents a specific demographic group, it may not perform as accurately for individuals from other groups. This can result in misdiagnoses or suboptimal treatment recommendations, exacerbating existing health disparities.

To address these concerns, developers of AI healthcare technologies must prioritize fairness and inclusivity in their design and testing processes. This includes using diverse and representative datasets, conducting bias audits, and engaging with stakeholders from diverse backgrounds to ensure that AI systems are equitable and accessible to all patients.
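A bias audit of the kind described above can start with something very simple: computing the same performance metric separately for each demographic group and looking at the gap. The sketch below does this for sensitivity (the share of true cases the tool catches). The group labels and records are invented for illustration, and a real audit would look at more metrics and far more data.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """records: iterable of (group, y_true, y_pred) tuples with 0/1 labels.

    Returns {group: true positives / all actual positives} for each group
    that has at least one actual positive case.
    """
    true_pos = defaultdict(int)
    false_neg = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            if y_pred == 1:
                true_pos[group] += 1
            else:
                false_neg[group] += 1
    groups = set(true_pos) | set(false_neg)
    return {g: true_pos[g] / (true_pos[g] + false_neg[g]) for g in groups}


def max_sensitivity_gap(records) -> float:
    """Largest difference in sensitivity between any two groups."""
    rates = sensitivity_by_group(records)
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())


# Invented example data: (group, actual diagnosis, tool prediction).
records = [
    ("A", 1, 1), ("A", 1, 1), ("A", 1, 1), ("A", 1, 0), ("A", 0, 0),
    ("B", 1, 1), ("B", 1, 0), ("B", 1, 0), ("B", 1, 0), ("B", 0, 1),
]
print(sensitivity_by_group(records))
print("gap:", max_sensitivity_gap(records))
```

In this toy data the tool catches 75% of true cases in group A but only 25% in group B, a gap that overall accuracy alone would hide.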

Patient Autonomy and Informed Consent

AI technologies have the potential to enhance patient care, but they also raise important questions about patient autonomy and informed consent. Patients have a right to understand how AI tools are being used in their care and to make informed decisions about their treatment options.

Healthcare providers must ensure that patients are fully informed about the role of AI in their care, including the potential benefits and limitations of AI-generated insights. This requires clear and transparent communication, as well as opportunities for patients to ask questions and express their preferences.

Informed consent is particularly important when it comes to data privacy and security. Patients must be aware of how their data is being collected, used, and shared, and they should have the ability to opt out of data sharing if they choose. Honoring these choices is central to using AI ethically and to maintaining patient trust.

Societal Implications of AI in Healthcare

The integration of AI into healthcare has broader societal implications that must be carefully considered. While AI has the potential to improve healthcare delivery and outcomes, it also raises questions about the future of work, the role of human clinicians, and the distribution of healthcare resources.

As AI technologies become more prevalent, there is a risk that they could displace certain roles within the healthcare workforce. While AI can automate routine tasks and enhance efficiency, it is essential to recognize the value of human expertise and empathy in patient care. Healthcare providers must be prepared to adapt to changing roles and responsibilities, ensuring that AI is used to complement, rather than replace, human clinicians.

Additionally, the widespread adoption of AI in healthcare could exacerbate existing disparities in access to care. If AI technologies are primarily available to well-resourced healthcare systems or affluent populations, they could widen the gap between those who have access to cutting-edge care and those who do not. Policymakers and healthcare leaders must work to ensure that AI technologies are accessible and affordable for all patients, regardless of their socioeconomic status or geographic location.

Case Studies and Real-World Examples

To better understand the implications of AI in healthcare and the lessons learned from the Texas settlement, it is helpful to examine real-world case studies and examples of AI technologies in action. These case studies provide valuable insights into the challenges and opportunities associated with AI in healthcare, as well as best practices for ensuring ethical and responsible use.

This section will explore several case studies of AI healthcare technologies, highlighting their impact on patient care, the challenges they faced, and the lessons learned from their implementation.

AI in Radiology: Enhancing Diagnostic Accuracy

One of the most promising applications of AI in healthcare is in the field of radiology. AI algorithms have been developed to analyze medical images, such as X-rays, CT scans, and MRIs, with the goal of enhancing diagnostic accuracy and efficiency.

For example, a study conducted by researchers at Stanford University demonstrated that an AI algorithm could detect pneumonia from chest X-rays with accuracy comparable to that of expert radiologists. The algorithm was trained on a large dataset of labeled images and used deep learning techniques to identify patterns indicative of pneumonia.

The use of AI in radiology has the potential to improve patient outcomes by enabling earlier and more accurate diagnoses. However, it also raises important questions about the role of human radiologists and the need for oversight and validation of AI-generated insights. Radiologists must be prepared to work alongside AI tools, using their clinical expertise to interpret AI-generated findings and make informed decisions about patient care.

AI in Personalized Medicine: Tailoring Treatment Plans

AI technologies are also being used to advance personalized medicine, which aims to tailor treatment plans to individual patients based on their unique genetic, environmental, and lifestyle factors. By analyzing large datasets of patient information, AI algorithms can identify patterns and correlations that may inform treatment decisions.

For example, an AI platform developed by IBM Watson Health has been used to analyze genomic data and recommend personalized treatment plans for cancer patients. The platform uses natural language processing and machine learning techniques to interpret scientific literature and clinical trial data, providing oncologists with evidence-based recommendations for targeted therapies.

While AI has the potential to revolutionize personalized medicine, it also presents challenges related to data privacy, consent, and the interpretation of complex genetic information. Healthcare providers must ensure that patients are fully informed about the use of AI in their care and that they have access to genetic counseling and support services.

AI in Predictive Analytics: Anticipating Patient Needs

Predictive analytics is another area where AI is making a significant impact in healthcare. By analyzing historical data and identifying trends, AI algorithms can predict patient needs and outcomes, enabling proactive and preventive care.

For example, a hospital in New York implemented an AI-based predictive analytics system to identify patients at risk of sepsis, a life-threatening condition caused by infection. The system analyzed electronic health records and vital signs data to identify early warning signs of sepsis, allowing clinicians to intervene before the condition became critical.
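To give a feel for what "early warning signs" means in code, the sketch below implements qSOFA, a published bedside screening score for sepsis risk. This is a deliberately simpler, rule-based stand-in and not the hospital’s AI system, whose details are not described here. The `Vitals` class and function names are hypothetical, and the example is for illustration only, not clinical use.

```python
from dataclasses import dataclass


@dataclass
class Vitals:
    respiratory_rate: int    # breaths per minute
    systolic_bp: int         # mmHg
    glasgow_coma_scale: int  # 3 to 15; below 15 counts as altered mentation


def qsofa_score(v: Vitals) -> int:
    """qSOFA: one point each for fast breathing, low blood pressure, altered mentation."""
    return (
        int(v.respiratory_rate >= 22)
        + int(v.systolic_bp <= 100)
        + int(v.glasgow_coma_scale < 15)
    )


def needs_sepsis_review(v: Vitals) -> bool:
    """A score of 2 or more is the usual cutoff for closer clinical review."""
    return qsofa_score(v) >= 2


patient = Vitals(respiratory_rate=24, systolic_bp=95, glasgow_coma_scale=15)
print(qsofa_score(patient), needs_sepsis_review(patient))
```

A machine learning system would typically draw on many more signals from the electronic health record, but the output plays the same role: it is a prompt for a clinician to look more closely, not a diagnosis.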

The use of predictive analytics in healthcare has the potential to improve patient outcomes and reduce healthcare costs by preventing adverse events and hospital readmissions. However, it also raises questions about data accuracy, algorithm transparency, and the potential for over-reliance on AI-generated predictions. Healthcare providers must ensure that predictive analytics tools are used as part of a comprehensive care strategy, with human clinicians playing a central role in decision-making.

Conclusion: Key Takeaways and Future Directions

The settlement between the Texas Attorney General and the AI healthcare firm serves as a pivotal moment in the regulation and ethical use of AI technologies in healthcare. It highlights the importance of transparency, accountability, and patient protection in the development and deployment of AI tools.

As AI continues to transform healthcare, stakeholders must navigate a complex landscape of ethical, legal, and operational challenges. Healthcare providers must prioritize due diligence, patient communication, and informed consent when adopting AI technologies. Developers must focus on fairness, inclusivity, and data privacy in their design and testing processes. Regulators must adapt their frameworks to keep pace with innovation while protecting consumers and upholding ethical standards.

Looking ahead, the future of AI in healthcare holds immense promise, but it also requires careful stewardship to ensure that these technologies are used responsibly and equitably. By fostering collaboration among healthcare providers, technology developers, regulators, and patients, we can harness the potential of AI to improve patient care and outcomes while upholding the highest ethical standards.