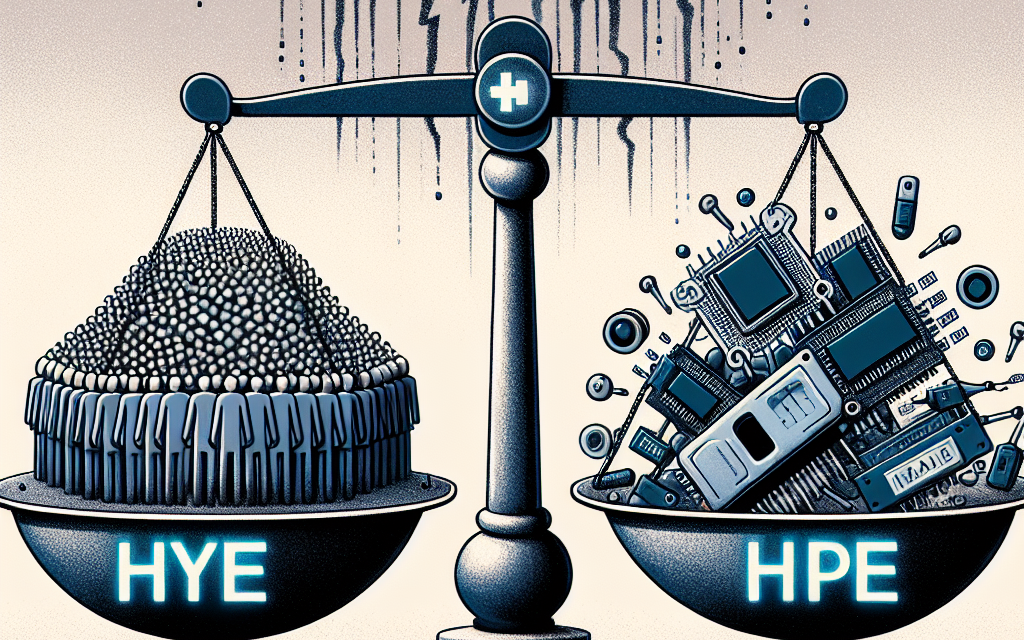

Prioritize Healthcare AI: Experts Warn Against Hype

The integration of artificial intelligence (AI) into healthcare has been heralded as a transformative force, promising to enhance patient outcomes, streamline operations, and reduce costs. However, as the excitement around AI grows, so does the caution from experts who warn against the hype surrounding its capabilities. This article delves into the complexities of healthcare AI, emphasizing the need for a balanced approach that prioritizes realistic expectations and ethical considerations. We will explore five critical subtopics: the current state of healthcare AI, the risks of overhyping AI, ethical implications, case studies of successful AI implementations, and the future of AI in healthcare.

The Current State of Healthcare AI

Healthcare AI is not a futuristic concept; it is already being utilized in various capacities across the industry. From diagnostic tools to administrative support, AI technologies are making their mark. However, understanding the current landscape is essential for stakeholders to make informed decisions.

AI applications in healthcare can be broadly categorized into three areas:

- Clinical Applications: These include diagnostic algorithms, predictive analytics, and personalized medicine. For instance, AI systems like IBM Watson Health have been used to analyze medical literature and assist in treatment recommendations.

- Operational Efficiency: AI is also being deployed to streamline administrative tasks, such as scheduling, billing, and patient management. Tools like Olive AI automate repetitive tasks, allowing healthcare professionals to focus on patient care.

- Patient Engagement: AI-driven chatbots and virtual health assistants are enhancing patient engagement by providing timely information and support. For example, the chatbot developed by Buoy Health helps patients navigate their symptoms and find appropriate care.

Despite these advancements, the adoption of AI in healthcare is not uniform. A report by the McKinsey Global Institute indicates that while 50% of healthcare executives believe AI will significantly impact their organizations, only 20% have implemented AI solutions. This disparity highlights the challenges of integrating AI into existing systems, including data interoperability, regulatory hurdles, and the need for workforce training.

Moreover, the effectiveness of AI tools often depends on the quality and quantity of data available. Many healthcare organizations struggle with data silos, which can hinder the development of robust AI models. As a result, while some AI applications show promise, others may fall short of expectations due to inadequate data or lack of clinical validation.

The Risks of Overhyping AI

The excitement surrounding AI in healthcare can lead to unrealistic expectations. Experts caution that overhyping AI can result in disillusionment and mistrust among healthcare professionals and patients alike. Understanding the limitations of AI is crucial for its successful integration into healthcare systems.

One significant risk of overhyping AI is the potential for misdiagnosis or inappropriate treatment recommendations. For example, an AI algorithm trained on biased data may produce skewed results, leading to disparities in care. A study published in the journal *Nature* found that AI systems used for skin cancer detection performed poorly on patients with darker skin tones, highlighting the importance of diverse training datasets.
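One practical way to surface this kind of disparity is to report a model's performance separately for each patient subgroup instead of as a single aggregate number. The following is a minimal sketch, not the method of any study cited here: the function name `sensitivity_by_group`, the group labels, and the records are all hypothetical and synthetic, chosen only to show the calculation.

```python
from collections import defaultdict

def sensitivity_by_group(records):
    """Return per-group sensitivity (true positive rate).

    Each record is a tuple (group, true_label, predicted_label),
    where labels are 0 (no disease) or 1 (disease).
    Groups that contain no positive cases are reported as None.
    """
    true_pos = defaultdict(int)
    positives = defaultdict(int)
    for group, truth, pred in records:
        positives[group] += 0  # register the group even if it has no positives
        if truth == 1:
            positives[group] += 1
            if pred == 1:
                true_pos[group] += 1
    return {g: (true_pos[g] / n if n else None) for g, n in positives.items()}

# Synthetic, made-up records used purely for illustration.
records = [
    ("lighter", 1, 1), ("lighter", 1, 1), ("lighter", 1, 0), ("lighter", 0, 0),
    ("darker", 1, 1), ("darker", 1, 0), ("darker", 1, 0), ("darker", 0, 0),
]

rates = sensitivity_by_group(records)
for group, rate in rates.items():
    print(group, rate)
```

A large gap between groups in a report like this is a signal to examine the training data and validation cohort before the tool is used in care.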

Additionally, the reliance on AI can lead to a reduction in critical thinking among healthcare professionals. If clinicians become overly dependent on AI recommendations, they may overlook important clinical nuances that the technology cannot capture. This phenomenon, known as “automation bias,” can compromise patient safety and care quality.

Furthermore, the rapid pace of AI development can outstrip regulatory frameworks, leading to potential safety and efficacy concerns. The U.S. Food and Drug Administration (FDA) has begun to establish guidelines for AI in healthcare, but the regulatory landscape remains complex and evolving. Without proper oversight, there is a risk that subpar AI solutions could enter the market, jeopardizing patient safety.

To mitigate these risks, experts recommend a cautious approach to AI adoption, emphasizing the importance of clinical validation, transparency, and ongoing monitoring of AI systems. By setting realistic expectations and prioritizing patient safety, healthcare organizations can harness the benefits of AI while minimizing potential pitfalls.
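Ongoing monitoring can be as simple as comparing a deployed model's predictions with confirmed outcomes over a rolling window and flagging the model for review when agreement drops. The sketch below illustrates that idea under stated assumptions: the class name `RollingAccuracyMonitor`, the window size, and the threshold are hypothetical, and a real deployment would choose metrics and thresholds with clinical input.

```python
from collections import deque

class RollingAccuracyMonitor:
    """Track agreement between predictions and confirmed outcomes
    over the most recent `window` cases."""

    def __init__(self, window=100, threshold=0.9):
        self.outcomes = deque(maxlen=window)  # old cases drop off automatically
        self.threshold = threshold

    def record(self, prediction, actual):
        self.outcomes.append(prediction == actual)

    def accuracy(self):
        if not self.outcomes:
            return None  # nothing recorded yet
        return sum(self.outcomes) / len(self.outcomes)

    def needs_review(self):
        acc = self.accuracy()
        return acc is not None and acc < self.threshold

# Illustrative values only: four cases, two of which the model got right.
monitor = RollingAccuracyMonitor(window=4, threshold=0.75)
for pred, actual in [(1, 1), (0, 0), (1, 0), (0, 1)]:
    monitor.record(pred, actual)

print(monitor.accuracy(), monitor.needs_review())
```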

Ethical Implications of Healthcare AI

The integration of AI into healthcare raises significant ethical questions that must be addressed to ensure responsible use. As AI systems become more prevalent, stakeholders must consider issues related to privacy, bias, accountability, and informed consent.

One of the primary ethical concerns surrounding healthcare AI is patient privacy. AI systems often require access to vast amounts of personal health data to function effectively. This raises questions about data security and the potential for breaches. The Health Insurance Portability and Accountability Act (HIPAA) sets strict guidelines for patient data protection, but the rapid evolution of technology can outpace existing regulations.

Bias in AI algorithms is another critical ethical issue. If AI systems are trained on biased datasets, they may perpetuate existing health disparities. For instance, a 2019 study published in *Science* found that a widely used risk-prediction algorithm favored white patients over Black patients: because it used healthcare costs as a proxy for health needs, Black patients had to be sicker than white patients to receive the same risk score. This highlights the need for diverse and representative data, and for carefully chosen prediction targets, in AI training processes.

Accountability is also a significant concern. When an AI system makes a mistake, it can be challenging to determine who is responsible. Is it the developers of the algorithm, the healthcare providers who relied on it, or the institutions that implemented it? Establishing clear lines of accountability is essential to ensure that patients receive safe and effective care.

Informed consent is another area that requires careful consideration. Patients must be made aware of how their data will be used and the role of AI in their care. Transparency in AI decision-making processes is crucial to building trust between patients and healthcare providers.

To address these ethical implications, healthcare organizations should establish ethical guidelines for AI use, prioritize diversity in data collection, and engage patients in discussions about AI’s role in their care. By fostering an ethical framework, stakeholders can ensure that AI is used responsibly and equitably in healthcare.

Case Studies of Successful AI Implementations

While the challenges of healthcare AI are significant, there are also numerous examples of successful implementations that demonstrate its potential to improve patient care and operational efficiency. These case studies provide valuable insights into best practices and lessons learned.

One notable example is the use of AI in radiology. A study published in *Nature* in 2020 found that an AI system developed by Google Health outperformed human radiologists in detecting breast cancer in mammograms. The system reduced both false positives and false negatives on the study's test sets, leading to improved diagnostic accuracy. This case highlights the potential of AI to enhance clinical decision-making and improve patient outcomes.

Another successful implementation can be seen in the use of AI for predicting patient deterioration. The University of California, San Francisco (UCSF) developed an AI model that analyzes electronic health records (EHR) to identify patients at risk of sepsis. By flagging high-risk patients early, the model has enabled healthcare providers to intervene sooner, resulting in a significant reduction in sepsis-related mortality rates.

In the realm of operational efficiency, Mount Sinai Health System in New York has implemented AI-driven tools to optimize scheduling and resource allocation. By analyzing patient flow and demand patterns, the system has improved appointment scheduling, reduced wait times, and enhanced overall patient satisfaction. This case illustrates how AI can streamline administrative processes and improve the patient experience.

These case studies underscore the importance of clinical validation and collaboration between AI developers and healthcare professionals. Successful AI implementations require a deep understanding of clinical workflows and the specific needs of healthcare providers. By fostering partnerships between technology companies and healthcare organizations, stakeholders can ensure that AI solutions are tailored to address real-world challenges.

The Future of AI in Healthcare

The future of AI in healthcare is both promising and uncertain. As technology continues to evolve, stakeholders must navigate a complex landscape of opportunities and challenges. Several trends are likely to shape the future of healthcare AI.

One significant trend is the increasing emphasis on personalized medicine. AI has the potential to analyze vast amounts of genetic and clinical data to tailor treatments to individual patients. For example, companies like Tempus are using AI to analyze genomic data and provide oncologists with insights into the most effective treatment options for cancer patients.

Another trend is the growing focus on interoperability. As healthcare organizations adopt various AI solutions, the need for seamless data exchange becomes paramount. Standards such as HL7's Fast Healthcare Interoperability Resources (FHIR) define common data formats and interfaces for exchanging health records, enabling AI systems to access and analyze data from multiple sources.
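To make the value of a shared format concrete, the sketch below reads a FHIR-style `Patient` resource expressed as JSON and extracts a few fields. The field names (`resourceType`, `name`, `given`, `family`, `birthDate`, `gender`) follow the FHIR Patient resource, but the patient data is invented and the helper `summarize_patient` is a hypothetical name used only for illustration.

```python
import json

# Invented example data shaped like a FHIR Patient resource.
patient_json = """
{
  "resourceType": "Patient",
  "id": "example-123",
  "name": [{"family": "Rivera", "given": ["Ana", "Maria"]}],
  "gender": "female",
  "birthDate": "1980-04-12"
}
"""

def summarize_patient(resource):
    """Extract a small, flat summary from a Patient resource dict."""
    if resource.get("resourceType") != "Patient":
        raise ValueError("expected a Patient resource")
    names = resource.get("name") or [{}]
    first = names[0]  # use the first listed name
    parts = first.get("given", []) + [first.get("family", "")]
    return {
        "id": resource.get("id"),
        "name": " ".join(p for p in parts if p),
        "gender": resource.get("gender"),
        "birth_date": resource.get("birthDate"),
    }

summary = summarize_patient(json.loads(patient_json))
print(summary)
```

Because every conforming system uses the same field names, the same extraction code can in principle run against records from different sources without per-vendor parsing logic.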

Telemedicine is also expected to play a significant role in the future of healthcare AI. The COVID-19 pandemic accelerated the adoption of telehealth services, and AI can enhance these platforms by providing virtual health assistants, symptom checkers, and remote monitoring tools. This integration can improve access to care and empower patients to take an active role in managing their health.

However, the future of AI in healthcare is not without challenges. Regulatory frameworks will need to evolve to keep pace with technological advancements, ensuring that AI solutions are safe and effective. Additionally, addressing ethical concerns related to bias, privacy, and accountability will be crucial for building trust among patients and healthcare providers.

While the potential of AI in healthcare is immense, its integration must be approached with caution and realism. By prioritizing patient safety, ethical considerations, and evidence-based practices, stakeholders can harness the benefits of AI while mitigating risks. Realizing that promise will require a collaborative effort to ensure that the technology serves as a tool for improving patient care and outcomes.

Conclusion

The integration of AI into healthcare presents both opportunities and challenges. As experts warn against the hype surrounding AI, it is crucial for stakeholders to prioritize realistic expectations and ethical considerations. By understanding the current state of healthcare AI, recognizing the risks of overhyping its capabilities, addressing ethical implications, learning from successful case studies, and preparing for the future, healthcare organizations can navigate this complex landscape effectively.

Ultimately, the goal should be to leverage AI as a tool that enhances patient care, improves operational efficiency, and promotes equitable access to healthcare. By fostering collaboration between technology developers and healthcare professionals, we can ensure that AI serves as a valuable ally in the pursuit of better health outcomes for all.